An Introduction to Web Crawling Tools

Web crawling tools, also known as spiders or web scrapers, are essential for businesses looking to extract valuable data from websites for analysis and data mining purposes. These tools have a wide range of applications, from market research to search engine optimization (SEO). They collect data from various public sources and present it in a structured and usable format. By using web crawling tools, companies can keep track of news, social media, images, articles, competitors, and much more.

25 Best Web Crawling Tools to Extract Data from Websites Quickly

Scrapy

Scrapy is a popular open-source, Python-based web crawling framework that allows developers to create scalable web crawlers. It offers a comprehensive set of features that make it easier to implement web crawlers and extract data from websites. Scrapy is asynchronous: it does not wait for one request to finish before sending the next, so many requests are in flight concurrently and crawling is efficient. As a well-established web crawling tool, Scrapy is suitable for large-scale web scraping projects.
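To make the idea of asynchronous crawling concrete, here is a minimal sketch using Python's standard `asyncio` module. It is not Scrapy's own API (Scrapy is built on Twisted); the `fetch` and `crawl` functions and the example.com URLs are hypothetical, and the network call is simulated with a short sleep so the sketch runs offline.

```python
import asyncio
import time


async def fetch(url: str, delay: float = 0.1) -> str:
    """Stand-in for a network request: waits `delay` seconds, then returns."""
    await asyncio.sleep(delay)
    return f"<html>body of {url}</html>"


async def crawl(urls: list[str]) -> list[str]:
    # Start every request at once; the event loop interleaves the waits.
    # gather() returns results in the same order as the input URLs.
    return await asyncio.gather(*(fetch(u) for u in urls))


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(10)]
    start = time.perf_counter()
    pages = asyncio.run(crawl(urls))
    elapsed = time.perf_counter() - start
    # The ten simulated waits overlap instead of running back to back.
    print(f"fetched {len(pages)} pages in {elapsed:.2f}s")
```

Because the waits overlap, the total time stays close to the duration of a single request rather than the sum of all of them.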

Key Features

- It generates feed exports in formats such as JSON, CSV, and XML.

- It has built-in support for selecting and extracting data from sources either by XPath or CSS expressions.

- It allows extracting data from the web pages automatically using spiders.

- It is fast and powerful, with a scalable and fault-tolerant architecture.

- It is easily extensible, with a plug-in system and a rich API.

- It is portable, running on Linux, Windows, Mac and BSD.
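The selector and feed-export features above can be illustrated without installing anything. The following sketch uses only the Python standard library, not Scrapy itself: `xml.etree.ElementTree` supports a small XPath subset, and `json` and `csv` produce the export formats. The markup, field names, and function names are all made up for illustration.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical, well-formed markup standing in for a downloaded page.
PAGE = """
<html><body>
  <div class="quote"><span class="text">Stay curious.</span><small class="author">A. Writer</small></div>
  <div class="quote"><span class="text">Ship it.</span><small class="author">B. Coder</small></div>
</body></html>
"""


def extract_quotes(markup: str) -> list[dict]:
    """Select each quote block with an XPath-style expression."""
    root = ET.fromstring(markup.strip())
    items = []
    for node in root.findall(".//div[@class='quote']"):
        items.append({
            "text": node.findtext("span[@class='text']"),
            "author": node.findtext("small[@class='author']"),
        })
    return items


def to_json(items: list[dict]) -> str:
    return json.dumps(items, indent=2)


def to_csv(items: list[dict]) -> str:
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["text", "author"])
    writer.writeheader()
    writer.writerows(items)
    return buffer.getvalue()
```

Real pages are rarely well-formed XML, which is one reason dedicated tools ship their own tolerant HTML selectors.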

Pricing

- It is a free tool.

ParseHub

ParseHub is a web crawler tool that can collect data from websites that use AJAX technology, JavaScript, cookies, and more. Its machine-learning technology can read, analyze, and then transform web documents into relevant data. ParseHub's desktop application supports Windows, Mac OS X, and Linux operating systems. By offering a user-friendly interface, ParseHub is designed for non-programmers who want to extract data from websites.

Key Features

- It can scrape dynamic websites that use AJAX, JavaScript, infinite scroll, pagination, drop-downs, log-ins and other elements.

- It is easy to use and does not require coding skills.

- It is cloud-based and can store data on its servers.

- It supports IP rotation, scheduled collection, regular expressions, API and web-hooks.

- It can export data in JSON and Excel formats.

Pricing

- ParseHub has both free and paid plans. The prices for paid plans start at $149 per month and offer upgraded project speeds, a higher limit on the number of pages scraped per run, and the ability to create more projects.

Octoparse

Octoparse is a client-based web crawling tool that allows users to extract web data into spreadsheets without the need for coding. With a point-and-click interface, Octoparse is specifically built for non-coders. Users can create their own web crawlers to gather data from any website, and Octoparse provides pre-built scrapers for popular websites like Amazon, eBay, and Twitter. The tool also offers advanced features like scheduled cloud extraction, data cleaning, and bypassing blocking with IP proxy servers.

Key Features

- Point-and-click interface: You can easily select the web elements you want to scrape by clicking on them, and Octoparse will automatically identify the data patterns and extract the data for you.

- Advanced mode: You can customize your scraping tasks with various actions, such as entering text, clicking buttons, scrolling pages, looping through lists, etc. You can also use XPath or RegEx to locate the data precisely.

- Cloud service: You can run your scraping tasks on Octoparse’s cloud servers 24/7, and store your data in the cloud platform. You can also schedule your tasks and use automatic IP rotation to avoid being blocked by websites.

- API: You can access your data via API and integrate it with other applications or platforms. You can also turn any data into custom APIs with Octoparse.

Pricing

- It has both free and paid plans. The paid plans start at $89/month.

WebHarvy

WebHarvy is a point-and-click web scraping software designed for non-programmers. It can automatically scrape text, images, URLs, and emails from websites and save them in various formats, such as XML, CSV, JSON, or TSV. WebHarvy also supports anonymous crawling and handling dynamic websites by utilizing proxy servers or VPN services to access target websites.

Key Features

- Point-and-click interface for selecting data without coding or scripting

- Multiple-page mining with automatic crawling and scraping

- Category scraping for scraping data from similar pages or listings

- Image downloading from product details pages of e-commerce websites

- Automatic pattern detection for scraping lists or tables without extra configuration

- Keyword-based extraction by submitting input keywords to search forms

- Regular expressions for more flexibility and control over scraping

- Automated browser interaction for performing tasks like clicking links, selecting options, scrolling, and more

Pricing

- WebHarvy is sold for a one-time license fee rather than a subscription.

- License pricing starts at $139.

Beautiful Soup

Beautiful Soup is an open-source Python library for parsing HTML and XML documents. It builds a parse tree that makes it easier to extract data from web pages. Although it is not as fast as Scrapy, Beautiful Soup is praised for its ease of use and for the community support available when issues arise.

Key Features

- Parsing: You can use Beautiful Soup with various parsers, such as html.parser, lxml, html5lib, etc. to parse different types of web documents.

- Navigating: You can navigate the parse tree using Pythonic methods and attributes, such as find(), find_all(), select(), .children, .parent, .next_sibling, etc.

- Searching: You can search the parse tree using filters, such as tag names, attributes, text, CSS selectors, regular expressions, etc. to find the elements you want.

- Modifying: You can modify the parse tree by adding, deleting, replacing, or editing the elements and their attributes.
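As a rough illustration of what parsing and searching a document involves, the sketch below collects links using only `html.parser` from the Python standard library, which is also one of the parsers listed above. It does not use the Beautiful Soup API, and the `LinkCollector` class is a hypothetical name; Beautiful Soup wraps this kind of work behind calls such as `find_all()`.

```python
from html.parser import HTMLParser
from typing import Optional


class LinkCollector(HTMLParser):
    """Collects (href, link text) pairs while the parser walks the document."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, str]] = []
        self._href: Optional[str] = None   # href of the <a> currently open
        self._text: list[str] = []         # text fragments seen inside it

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


collector = LinkCollector()
collector.feed('<p><a href="/docs">Read the <b>docs</b></a></p>')
print(collector.links)
```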

Pricing

- Beautiful Soup is a free and open-source library that you can install using pip.

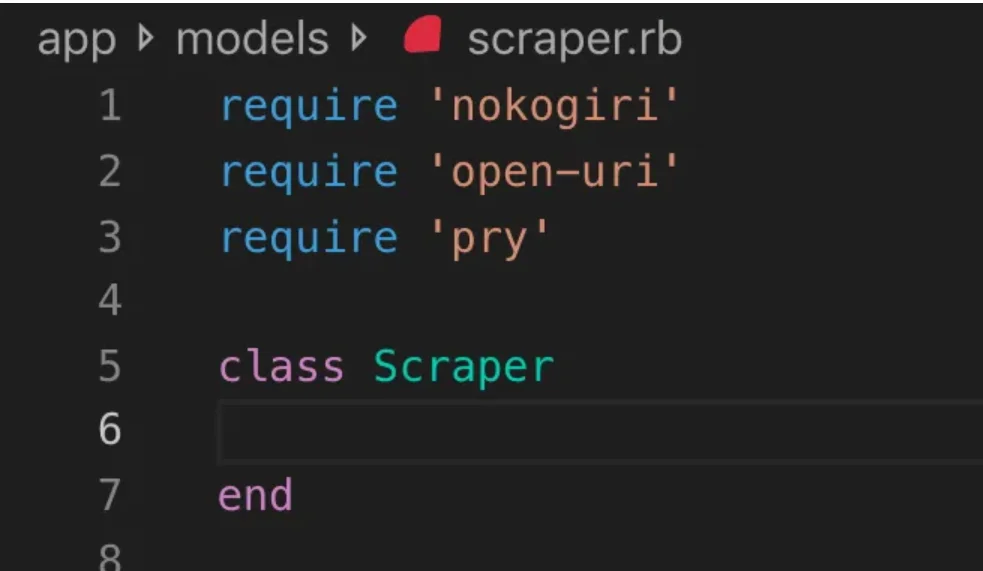

Nokogiri

Nokogiri is a Ruby library that makes it easy to parse HTML and XML documents. Ruby is widely regarded as a beginner-friendly language for web development. Nokogiri relies on native parsers such as libxml2 (C) and Xerces (Java), making it a powerful tool for extracting data from websites. It is well-suited for web developers who want to work with a Ruby-based web crawling library.

Key Features

- DOM Parser for XML, HTML4, and HTML5

- SAX Parser for XML and HTML4

- Push Parser for XML and HTML4

- Document search via XPath 1.0

- Document search via CSS3 selectors, with some jquery-like extensions

- XSD Schema validation

- XSLT transformation

- “Builder” DSL for XML and HTML documents

Pricing

- Nokogiri is an open source project that is free to use.

Zyte (Formerly Scrapinghub)

Zyte (formerly Scrapinghub) is a cloud-based data extraction tool that helps thousands of developers fetch valuable data from websites. Its open-source visual scraping tool allows users to scrape websites without any programming knowledge. Zyte uses Crawlera, a smart proxy rotator that helps bypass bot countermeasures so that large or bot-protected sites can be crawled more easily. Through a simple HTTP API, users can crawl from multiple IPs and locations without managing proxies themselves.

Key Features

- Data on-demand: Provide websites and data requirements to Zyte, and they deliver the requested data on your schedule.

- Zyte API: Automatically fetches HTML from websites using the most efficient proxy and extraction configuration, allowing you to focus on data without technical concerns.

- Scrapy Cloud: Scalable hosting for your Scrapy spiders, featuring a user-friendly web interface for managing, monitoring, and controlling your crawlers, complete with monitoring, logging, and data QA tools.

- Automatic data extraction API: Access web data instantly through Zyte's AI-powered extraction API, delivering quality structured data quickly. Onboarding new sources becomes simpler with this patented technology.

Pricing

Zyte has a flexible pricing model that depends on the complexity and volume of the data you need. You can choose from three plans:

- Developer: $49/month for 250K requests

- Business: $299/month for 2M requests

- Enterprise: Custom pricing for 10M+ requests

- You can also try Zyte for free with 10K requests per month.

HTTrack

HTTrack is a free and open-source web crawling tool that allows users to download entire websites or specific web pages to their local device for offline browsing. It offers a command-line interface and can be used on Windows, Linux, and Unix systems.

Key Features

- It preserves the original site’s relative link-structure.

- It can update an existing mirrored site and resume interrupted downloads.

- It is fully configurable and has an integrated help system.

- It supports various platforms such as Windows, Linux, OSX, Android, etc.

- It has a command line version and a graphical user interface version.

Pricing

- HTTrack is free software licensed under the GNU GPL.

Apache Nutch

Apache Nutch is an extensible open-source web crawler often used in fields like data analysis. It can fetch content through protocols such as HTTPS, HTTP, or FTP and extract textual information from document formats like HTML, PDF, RSS, and ATOM.

Key Features

- It is based on Apache Hadoop data structures, which are great for batch processing large data volumes.

- It has a highly modular architecture, allowing developers to create plug-ins for media-type parsing, data retrieval, querying and clustering.

- It integrates with Apache Tika for parsing, Apache Solr and Elasticsearch for indexing, and Apache HBase for storage.

Pricing

- Apache Nutch is free software licensed under the Apache License 2.0.

Helium Scraper

Helium Scraper is a visual web data crawling tool that can be customized and controlled by users without the need for coding. It offers advanced features like proxy rotation, fast extraction, and support for multiple data formats such as Excel, CSV, MS Access, MySQL, MSSQL, XML, or JSON.

Key Features

- Fast Extraction: Automatically delegate extraction tasks to separate browsers

- Big Data: SQLite database can hold up to 140 terabytes

- Database Generation: Table relations are generated based on extracted data

- SQL Generation: Quickly join and filter tables for exporting or for input data

- API Calling: Integrate web scraping and API calling into a single project

- Text Manipulation: Generate functions to match, split or replace extracted text

- JavaScript Support: Inject and run custom JavaScript code on any website

- Proxy Rotation: Enter a list of proxies and rotate them at any given interval

- Similar Elements Detection: Detects similar elements from one or two samples

- List Detection: Automatically detect lists and table rows on websites

- Data Exporting: Export data to CSV, Excel, XML, JSON, or SQLite

- Scheduling: Launchable from the command line or Windows Task Scheduler
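Proxy rotation, as listed above, can be sketched in a few lines. This is not Helium Scraper's implementation; the `ProxyRotator` class is hypothetical, the proxy addresses are placeholders, and the "interval" is assumed here to be a number of requests rather than a time span.

```python
from itertools import cycle


class ProxyRotator:
    """Hands out proxies round-robin, switching after `every` requests."""

    def __init__(self, proxies: list[str], every: int = 1) -> None:
        if not proxies:
            raise ValueError("at least one proxy is required")
        if every < 1:
            raise ValueError("every must be >= 1")
        self._pool = cycle(proxies)
        self._every = every
        self._used = 0                      # requests served by the current proxy
        self._current = next(self._pool)

    def next_proxy(self) -> str:
        if self._used == self._every:
            self._current = next(self._pool)
            self._used = 0
        self._used += 1
        return self._current


# Placeholder addresses from the documentation-only 192.0.2.0/24 range.
rotator = ProxyRotator(["192.0.2.10:8080", "192.0.2.11:8080"], every=2)
print([rotator.next_proxy() for _ in range(5)])
```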

Pricing

- The basic license costs $99 per user.

Content Grabber (Sequentum)

Content Grabber is web crawling software targeted at enterprises, allowing users to create stand-alone web crawling agents. It offers advanced features like integration with third-party data analytics or reporting applications, powerful script editing and debugging interfaces, and support for exporting data to Excel reports, XML, CSV, and most databases.

Key Features

- Easy to use point and click interface: Automatically detect actions based on HTML elements

- Robust API: Supports easy drag-and-drop integration with existing data pipelines

- Customization: Customize your scraping agents with common coding languages like Python, C#, JavaScript, Regular Expressions

- Integration: Integrate third-party AI, ML, NLP libraries, or APIs for data enrichment

- Reliability & Scale: Keep infrastructure costs down while enjoying real-time monitoring of end-to-end operations

- Legal Compliance: Decrease your liability and mitigate risk associated with costly lawsuits and regulatory fines

- Data Exporting: Export data to any format and deliver to any endpoint

- Scheduling: Launch your scraping agents from the command line or Windows Task Scheduler

Pricing

- The basic license costs $27,500 per year and allows you to use the software on one computer.

Cyotek WebCopy

Cyotek WebCopy is a free website crawler that allows users to copy partial or full websites locally into their hard disk for offline reference. It can detect and follow links within a website and automatically remap links to match the local path. However, WebCopy does not include a virtual DOM or any form of JavaScript parsing, so it may not correctly handle dynamic website layouts due to heavy use of JavaScript.
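Link remapping of this kind can be sketched as follows. This is an illustration only, not WebCopy's actual algorithm: the function names and the on-disk layout (one folder per host, `index.html` for directory URLs) are assumptions, and query strings are ignored for brevity.

```python
import posixpath
from urllib.parse import urljoin, urlparse


def local_path(url: str) -> str:
    """Map a URL to a file path inside the mirror directory."""
    parsed = urlparse(url)
    path = parsed.path
    if path == "" or path.endswith("/"):
        path += "index.html"          # directory URLs get an index file
    return posixpath.join(parsed.netloc, path.lstrip("/"))


def remap_link(page_url: str, href: str) -> str:
    """Rewrite `href` so it still works when the page is opened from disk."""
    target = urljoin(page_url, href)  # resolve relative links first
    if urlparse(target).netloc != urlparse(page_url).netloc:
        return href                   # leave off-site links untouched
    start = posixpath.dirname(local_path(page_url))
    return posixpath.relpath(local_path(target), start)


print(remap_link("https://example.com/blog/post.html", "/img/logo.png"))
```

A site-absolute link such as `/img/logo.png` becomes a relative path from the saved page's folder, which is what lets the copy work without a web server.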

Key Features

- It copies partial or full websites to a local disk for offline reference.

- It detects and follows links within a website.

- It automatically remaps links to match the local path.

- It does not parse JavaScript, so pages that rely heavily on scripts may not be copied correctly.

Pricing

- Cyotek WebCopy is free to use.

80legs

80legs is a powerful web crawling tool that can be configured based on customized requirements. It supports fetching large amounts of data along with the option to download the extracted data instantly. The tool offers an API for users to create crawlers, manage data, and more. Some of its main features include scraper customization, IP servers for web scraping requests, and a JS-based app framework for configuring web crawls with custom behaviors.

Key Features

- Scalable and fast: You can crawl up to 2 billion pages per day with over 50,000 concurrent requests.

- Flexible and customizable: You can use your own code to control the crawling logic and data extraction, or use the built-in tools and templates.

Pricing

- You can choose from different pricing plans based on your needs, starting from $29/month for 100,000 URLs/crawl to $299/month for 10 million URLs/crawl.

Webhose.io

Webhose.io enables users to get real-time data by crawling online sources from all over the world and presenting it in various clean formats. This web crawler tool can crawl data and extract keywords in different languages, using multiple filters that cover a wide array of sources. Users can save the scraped data in XML, JSON, and RSS formats and access historical data from its Archive. Webhose.io supports up to 80 languages in its crawl results, enabling users to easily index and search the structured data crawled by the tool.

Key Features

- Multiple formats: You can get data in XML, JSON, RSS, or Excel formats.

- Structured results: You can get data that is normalized, enriched, and categorized according to your needs.

- Historical data: You can access archived data from the past 12 months or more.

- Wide coverage: You can get data from over a million sources in 80 languages and 240 countries.

- Variety of sources: You can get data from news sites, blogs, forums, message boards, comments, reviews, and more.

- Quick integration: You can integrate Webhose.io with your systems in minutes with a simple REST API.

Pricing

- It has a free plan that allows you to make 1000 requests per month at no cost. Custom plans are also available; contact the company for a quote.

Mozenda

Mozenda is a cloud-based web scraping software that allows users to extract web data without writing a single line of code. It automates the data extraction process and offers features like scheduled data extraction, data cleaning, and bypassing blocking with IP proxy servers. Mozenda is designed for businesses, with a user-friendly interface and powerful scraping capabilities.

Key Features

- Text analysis: You can extract and analyze text data from any website using natural language processing techniques.

- Image extraction: You can download and save images from web pages or extract image metadata such as size, format, resolution, etc.

- Disparate data collection: You can collect data from multiple sources and formats such as HTML, XML, JSON, RSS, etc.

- Document extraction: You can extract data from PDF, Word, Excel and other document types using optical character recognition (OCR) or text extraction methods.

- Email address extraction: You can find and extract email addresses from web pages or documents using regular expressions or pattern matching.
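The regular-expression approach to email extraction mentioned above might look like the sketch below. It is not Mozenda's implementation; the pattern is deliberately simple and will miss some valid addresses, and the function name and sample text are made up.

```python
import re

# Simplified pattern: local part, "@", domain labels, and a 2+ letter TLD.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def extract_emails(text: str) -> list[str]:
    """Return unique addresses, lowercased, in order of first appearance."""
    seen: dict[str, None] = {}
    for match in EMAIL_RE.findall(text):
        seen.setdefault(match.lower(), None)
    return list(seen)


sample = "Contact sales@example.com or Support@Example.org. Again: sales@example.com"
print(extract_emails(sample))
```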

Pricing

- The paid plan starts at $99 per month.

UiPath

UiPath is robotic process automation (RPA) software that can be used for web scraping. It automates the extraction of web and desktop data from most third-party apps. Compatible with Windows, UiPath can extract tabular and pattern-based data across multiple web pages. The software also offers built-in tools for further crawling and for handling complex user interfaces.

Key Features

- Text analysis: Extract and analyze text data using natural language processing, regular expressions, and pattern matching for tasks like email address extraction.

- Image extraction: Download and save images from web pages, extract image metadata including size, format, and resolution.

- Disparate data collection: Gather data from various sources and formats like HTML, XML, JSON, RSS, with integration capabilities for connecting to other online services and APIs.

- Document extraction: Extract data from PDF, Word, Excel, and other document types using OCR or text extraction methods. Process and extract information across different document types and structures with document understanding features.

- Web automation: Automate web-based activities such as logging in, navigating through pages, filling forms, clicking buttons. Utilize the recorder feature to capture actions and generate automation scripts.

Pricing

- The paid plan starts at $420 per month.

OutWit Hub

OutWit Hub is a Firefox add-on with dozens of data extraction features to simplify users' web searches. This web crawler tool can browse through pages and store the extracted information in a suitable format. OutWit Hub offers a single interface for scraping small or large amounts of data as needed, and it can create automatic agents to extract data from various websites in a matter of minutes.

Key Features

- View and export web content: You can view the links, documents, images, contacts, data tables, RSS feeds, email addresses, and other elements contained in a webpage. You can also export them to HTML, SQL, CSV, XML, JSON, or other formats.

- Organize data in tables and lists: You can sort, filter, group, and edit the data you collect in tables and lists. You can also use multiple criteria to select the data you want to extract.

- Set up automated functions: You can use the scraper feature to create custom scrapers that can extract data from any website using simple or advanced commands. You can also use the macro feature to automate web browsing and scraping tasks.

- Generate queries and URLs: You can use the query feature to generate queries based on keywords or patterns. You can also use the URL feature to generate URLs based on patterns or parameters.
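Generating URLs from a pattern and a set of parameters, as described in the last item, can be sketched with the standard library. This is not OutWit Hub's feature itself; the function name, the `q` and `page` parameter names, and the example.com address are assumptions for illustration.

```python
from urllib.parse import urlencode


def paged_urls(base: str, query: str, pages: int, page_param: str = "page") -> list[str]:
    """Build one search URL per result page, with the query safely encoded."""
    return [
        f"{base}?{urlencode({'q': query, page_param: n})}"
        for n in range(1, pages + 1)
    ]


for url in paged_urls("https://example.com/search", "web crawler", 3):
    print(url)
```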

Pricing

- The Light license is free and fully operational, but it doesn’t include the automation features and limits extraction to one or a few hundred rows, depending on the extractor.

- The Pro license costs $110 per year and includes all the features of the Light license plus the automation features and unlimited extraction.

Visual Scraper

Visual Scraper, apart from being a SaaS platform, also offers web scraping services such as data delivery and building software extractors for clients. This web crawling tool covers the entire life cycle of a crawler, from downloading and URL management to content extraction. It allows users to schedule projects to run at specific times or to repeat every minute, day, week, month, or year. Visual Scraper is ideal for users who want to extract news, updates, and forum posts frequently. However, the official website no longer appears to be maintained, so this information may be out of date.

Key Features

- Easy to use interface

- Supports multiple data formats (CSV, JSON, XML, etc.)

- Supports pagination, AJAX, and dynamic websites

- Supports proxy servers and IP rotation

- Supports scheduling and automation

Pricing

- It has a free plan and paid plans starting from $39.99 per month.

Import.io

Import.io is a web scraping tool that allows users to import data from a specific web page and export it to CSV without writing any code. It can easily scrape thousands of web pages in minutes and build 1000+ APIs based on users' requirements. Import.io integrates web data into a user's app or website with just a few clicks, making web scraping easier.

Key Features

- Point and click selection and training

- Authenticated and interactive extraction

- Image downloads and screenshots

- Premium proxies and country-specific extractors

- CSV, Excel, JSON output and API access

- Data quality SLA and reporting

- E-mail, ticket, chat and phone support

Pricing

- Starter: $199 per month for 5,000 queries

Dexi.io

Dexi.io is a browser-based web crawler that lets users scrape data from any website directly in the browser. It provides three types of robots for creating a scraping task: Extractor, Crawler, and Pipes. The free version provides anonymous web proxy servers, and extracted data is hosted on Dexi.io's servers for two weeks before being archived; users can also export the extracted data directly to JSON or CSV files. It offers paid services for users who require real-time data extraction.

Key Features

- Three robot types for building scraping tasks: Extractor, Crawler, and Pipes

- Anonymous web proxy servers

- Extracted data hosted on Dexi.io's servers for two weeks before archiving

- Direct export to JSON or CSV files

- Paid services for real-time data extraction

Pricing

- Standard: $119 per month or $1,950 per year for 1 worker

Puppeteer

Puppeteer is a Node.js library developed by Google that provides an API for controlling Chrome or Chromium over the DevTools Protocol. Developers can use it to build web scraping tools, take screenshots or generate PDFs of web pages, automate form submissions and data input, and create automated tests.

Key Features

- Generate screenshots and PDFs of web pages

- Crawl and scrape data from websites

- Automate form submission, UI testing, keyboard input, etc.

- Capture performance metrics and traces

- Test Chrome extensions

- Run in headless or headful mode

Pricing

- Puppeteer is free and open-source.

Crawler4j

Crawler4j is an open-source Java web crawler with a simple interface to crawl the web. It allows users to build multi-threaded crawlers while being efficient in memory usage. Crawler4j is well-suited for developers who want a straightforward and customizable Java-based web crawling solution.

Key Features

- It lets you specify which URLs should be crawled and which ones should be ignored using regular expressions.

- It lets you handle the downloaded pages and extract data from them.

- It respects the robots.txt protocol and avoids crawling pages that are disallowed.

- It can crawl HTML, images, and other file types.

- It can gather statistics and run multiple crawlers concurrently.
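The first and third features above, regex-based URL filtering and robots.txt compliance, can be combined in a short sketch. Crawler4j itself is a Java library, so this Python version only illustrates the idea; the skip pattern, the site prefix, the `sketch-bot` user agent, and the inline robots.txt rules are all hypothetical.

```python
import re
from urllib.robotparser import RobotFileParser

# Hypothetical rule: skip static assets and archives.
SKIP = re.compile(r".*\.(css|js|gif|jpe?g|png|mp3|mp4|zip|gz)$", re.IGNORECASE)

# Inline rules so the sketch runs offline; a crawler would download these.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def build_robots(text: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


def should_visit(url: str, robots: RobotFileParser,
                 prefix: str = "https://example.com/",
                 agent: str = "sketch-bot") -> bool:
    """Apply the regex filter, the same-site check, then robots.txt."""
    if SKIP.match(url):
        return False
    if not url.startswith(prefix):
        return False
    return robots.can_fetch(agent, url)


robots = build_robots(ROBOTS_TXT)
print(should_visit("https://example.com/articles/1.html", robots))
```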

Pricing

- Crawler4j is a free, open-source Java project.

Common Crawl

Common Crawl provides an open corpus of web crawl data for research, analysis, and education purposes.

Key Features

- It offers users access to web crawl data such as raw web page data, extracted metadata, and text, as well as the Common Crawl Index.

Pricing

- This free and publicly accessible web crawl data can be used by developers, researchers, and businesses for various data analysis tasks.

MechanicalSoup

MechanicalSoup is a Python library for automating interaction with websites. It is based on the Beautiful Soup library and takes inspiration from the Mechanize library. It stores cookies, follows redirects, and can follow hyperlinks and handle forms on a website.

Key Features

- MechanicalSoup offers a simple way to browse and extract data from websites without having to deal with complex programming tasks.

Pricing

- It is a free tool.

Node Crawler

Node Crawler is a popular and powerful package for crawling websites on the Node.js platform. It is built on Cheerio and comes with many options to customize the way users crawl or scrape the web, including limiting the number of requests and the delay between them. Node Crawler is ideal for developers who prefer working with Node.js for their web crawling projects.

Key Features

- Easy to use

- Event-driven API

- Configurable retries and timeouts

- Automatic encoding detection

- Automatic cookie handling

- Automatic redirection handling

- Automatic gzip/deflate handling
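Limiting the time between requests, one of the options mentioned in the description, comes down to a small throttle. Node Crawler is a JavaScript package, so the Python sketch below only illustrates the idea rather than its actual options; the `Throttle` class is hypothetical, and the clock and sleep functions are injectable so the behavior can be checked without real waiting.

```python
import time
from typing import Callable, Optional


class Throttle:
    """Enforces a minimum gap between consecutive requests."""

    def __init__(self, min_interval: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self._min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last: Optional[float] = None  # time the last request was released

    def wait(self) -> float:
        """Block until the next request is allowed; return seconds slept."""
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            delay = max(0.0, self._min_interval - (now - self._last))
            if delay:
                self._sleep(delay)
        self._last = now + delay
        return delay
```

Calling `wait()` before each request keeps the crawler from hitting a site faster than the configured interval.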

Pricing

- It is a free tool.

Factors to Consider When Choosing a Web Crawling Tool

Pricing

Consider the pricing structure of the chosen tool and ensure that it is transparent, with no hidden costs. Opt for a company that offers a clear pricing model and provides detailed information on the features available.

Ease of Use

Choose a web crawling tool that is user-friendly and does not require extensive technical knowledge. Many tools offer point-and-click interfaces, making it easier for non-programmers to extract data from websites.

Scalability

Consider whether the web crawling tool can handle the volume of data you need to extract and whether it can grow with your business. Some tools are more suitable for small-scale projects, while others are designed for large-scale data extraction.

Data Quality and Accuracy

Ensure that the web crawling tool can clean and organize the extracted data in a usable format. Data quality is crucial for accurate analysis, so choose a tool that provides efficient data cleaning and organization features.
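As a small example of what data cleaning means in practice, the sketch below normalizes whitespace, drops empty rows, and removes duplicates from scraped records. The function name and the sample fields are hypothetical, and real pipelines usually need more than this.

```python
def clean_records(records: list[dict]) -> list[dict]:
    """Normalize whitespace, drop empty rows, and remove duplicate rows."""
    cleaned: list[dict] = []
    seen: set[tuple] = set()
    for record in records:
        # Collapse runs of whitespace (including newlines) to single spaces.
        row = {key: " ".join(str(value).split()) for key, value in record.items()}
        if not any(row.values()):
            continue                      # every field is empty
        fingerprint = tuple(sorted(row.items()))
        if fingerprint in seen:
            continue                      # same content already kept
        seen.add(fingerprint)
        cleaned.append(row)
    return cleaned


raw = [
    {"name": "  Widget \n A ", "price": "9.99"},
    {"name": "Widget A", "price": "9.99"},
    {"name": "", "price": " "},
]
print(clean_records(raw))
```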

Customer Support

Choose a web crawling tool with responsive and helpful customer support to assist you when issues arise. Before committing, test the support team by contacting them and noting how long they take to respond.

Conclusion

Web crawling tools are essential for businesses that want to extract valuable data from websites for various purposes, such as market research, SEO, and competitive analysis. By considering factors such as pricing, ease of use, scalability, data quality and accuracy, and customer support, you can choose the right web crawling tool that suits your needs. The top 25 web crawling tools mentioned above cater to a range of users, from non-programmers to developers, ensuring that there is a suitable tool for everyone.
