Key Highlights

- The llms.txt file is a proposed standard that gives large language models (LLMs) a clear, concise guide to your website's content.

- It uses a simple Markdown format to give AI quick access to your most important information, sidestepping the context window limits that complex HTML pages run into.

- Unlike robots.txt, which tells crawlers what not to crawl, llms.txt is designed to help AI interpret the content of your site for better search results.

- This file is an emerging part of generative engine optimization (GEO), helping improve your visibility in AI-powered searches.

- Creating an llms.txt file involves summarizing your site and linking to key documentation and URLs.

- Tools and plugins published in GitHub repositories can help automate the creation process for various development environments.

What is llms.txt?

As artificial intelligence changes the way people discover information, large language models are starting to act like the new search engines. To stand out, your website has to speak their language, not just appeal to human readers. That's where llms.txt comes in.

Think of it as a quick guidebook for AI. Instead of wandering through endless code and complicated navigation, a language model can open this file and find a clear, curated snapshot of what matters most on your site.

It's like leaving a trail of signposts that point directly to your most valuable pages. When you give AI this shortcut, you make it more likely that your content is represented accurately in generative search results. This small step can make a real difference in your visibility.

What Makes llms.txt Valuable for Website Owners?

For website owners, llms.txt isn't just a technical file; it's a way to shape how AI "sees" your site. Large language models have a limited context window, so they can't take in an entire website at once.

Llms.txt is a cheat sheet that gives AI the essentials without overwhelming it.

Here are the main reasons why adding an llms.txt file to your website matters for owners:

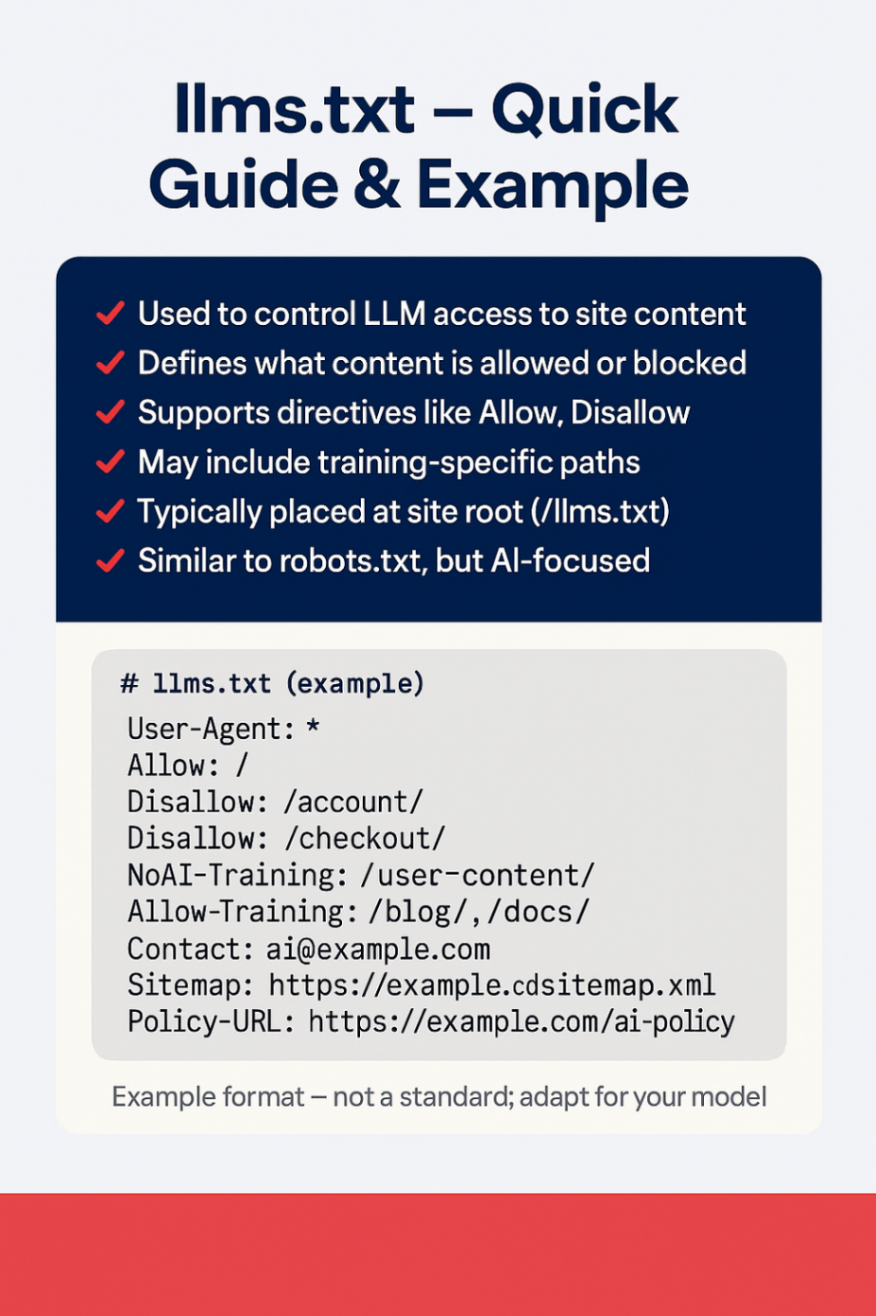

What is a good llms.txt example?

A good llms.txt file clearly tells AI models what your site is about and which parts of it matter most. Some site owners also use it to state which content may be used for AI training, although that is a convention rather than part of the core proposal.

It strikes a balance between openness and privacy by pointing to essential content while leaving out sensitive areas. Including contact info and policy links gives models more context for interacting with your site correctly.

Let's look at a practical example to understand the format and structure.

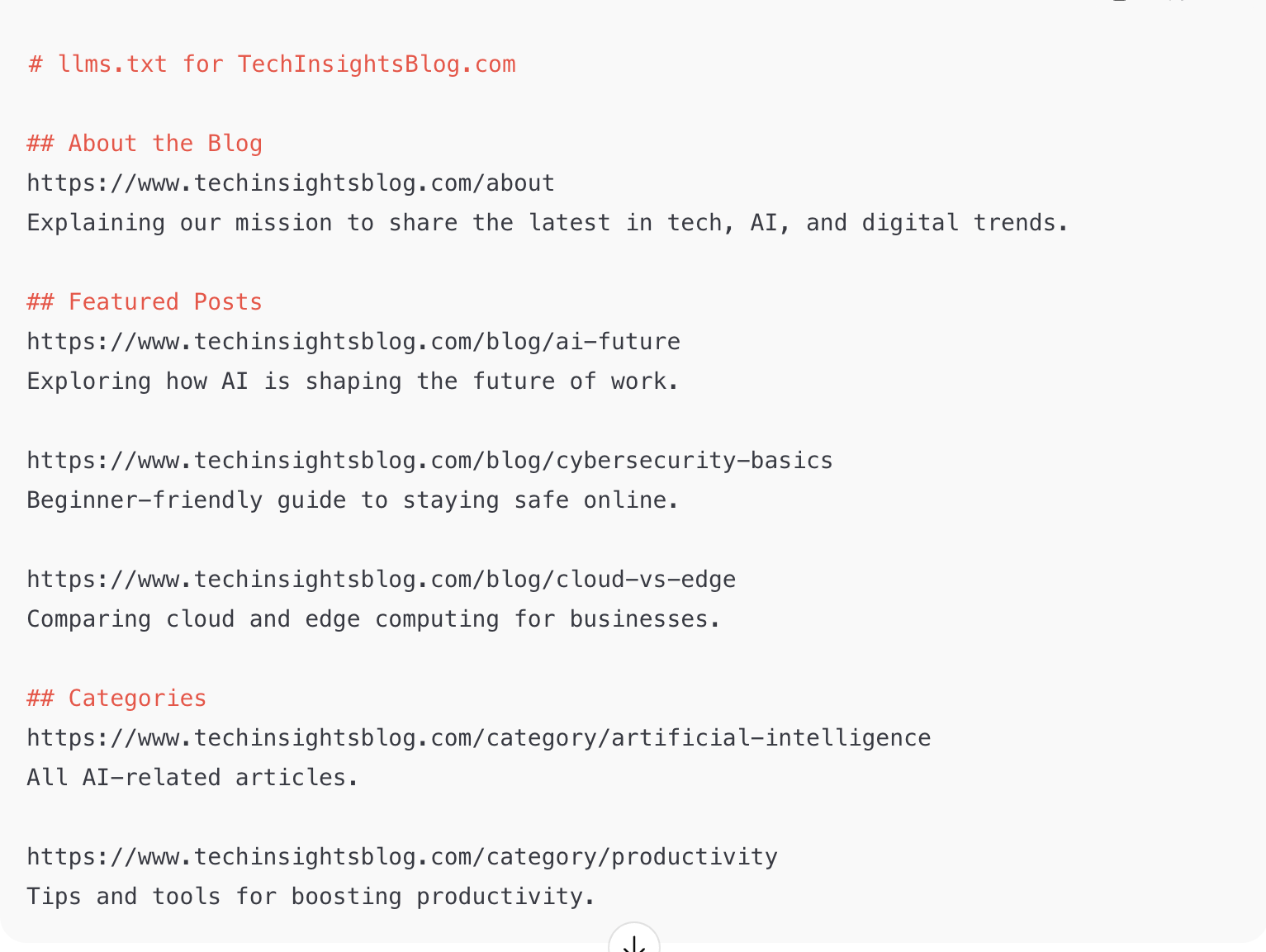

This structure ensures that an AI can quickly identify the purpose of your site and find the most relevant docs and URLs without having to process unnecessary code.

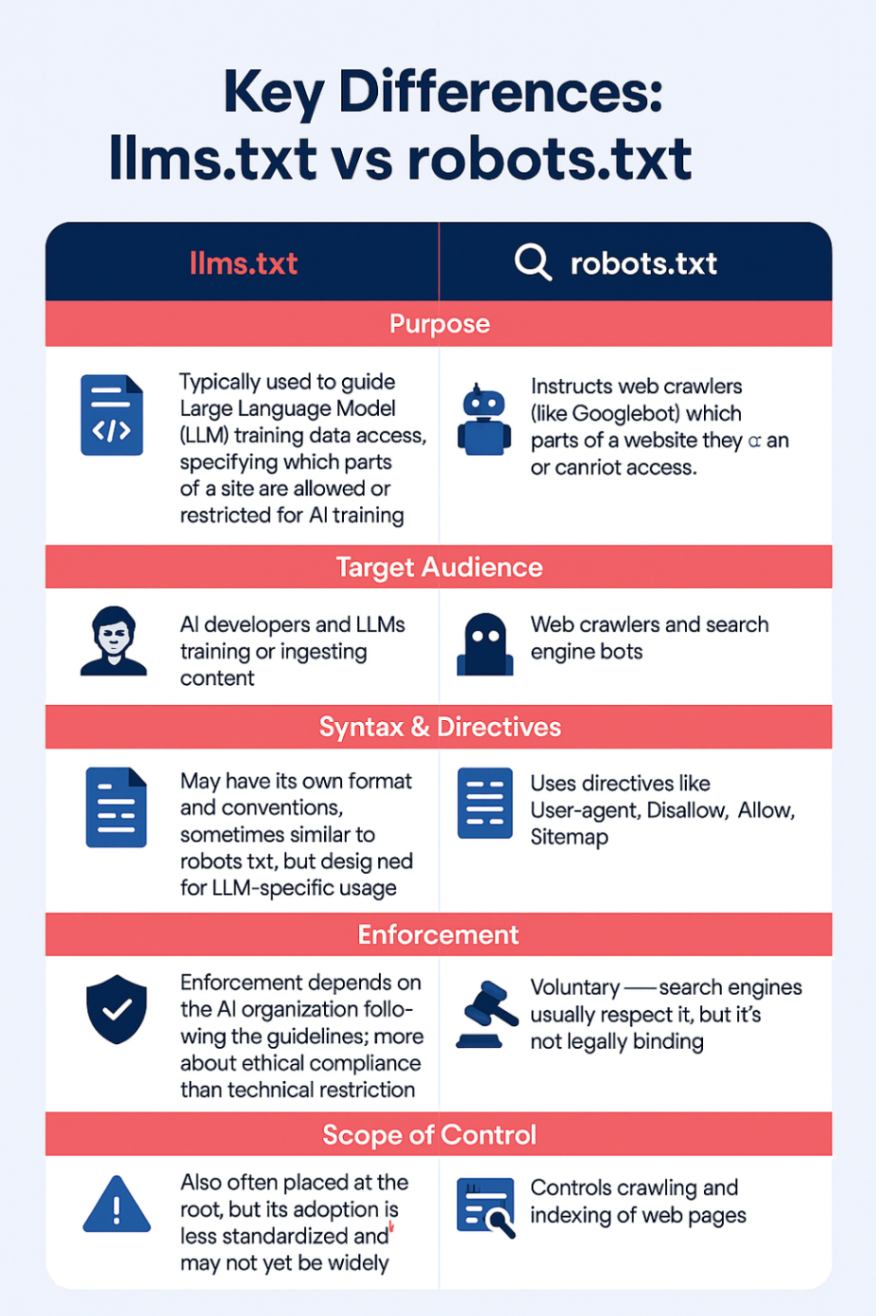

What are the key differences between llms.txt and robots.txt?

You might think llms.txt is just a new version of robots.txt, but the two serve very different purposes. While robots.txt tells search engines what not to crawl, llms.txt guides AI on how to understand your content. One restricts access; the other provides context.

Let's break down their key differences.
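To make the contrast concrete, here is a minimal Python sketch. It uses the standard library's `urllib.robotparser`, which can only answer a yes/no crawl question for a URL, next to a small hypothetical helper, `llms_summary`, that reads context out of an llms.txt file. The sample rules, the "Example Co" content and the `yourdomain.com` URLs are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: an access rule, nothing about meaning.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""

# Illustrative llms.txt: context about the site, nothing about access.
LLMS_TXT = """\
# Example Co

> Example Co sells project-management software for small teams.

## Docs

- [Getting started](https://yourdomain.com/docs/start.md): Setup guide
"""

def robots_allows(url: str, agent: str = "*") -> bool:
    """robots.txt answers a yes/no question: may this agent crawl this URL?"""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(agent, url)

def llms_summary(text: str) -> str:
    """llms.txt is read for context; here we return its blockquote summary."""
    for line in text.splitlines():
        if line.startswith("> "):
            return line[2:].strip()
    return ""

print(robots_allows("https://yourdomain.com/admin/users"))  # False
print(robots_allows("https://yourdomain.com/docs/start"))   # True
print(llms_summary(LLMS_TXT))
```

The robots.txt side only ever produces True or False; the llms.txt side produces text an AI can use to understand the site.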

How should you prepare to create your llms.txt file?

First, you need to identify the most crucial website information you want an AI to understand. This could be your core mission, key product docs, or essential guides. The goal is to distill your site down to its most critical parts.

Since the proposed standard uses plain text and Markdown, you won't need to worry about complex HTML. This simplicity helps you stay within context window limits and is a significant first step in your AI search engine optimization strategy. Now, let's get into the specifics of what to include and how to format it.

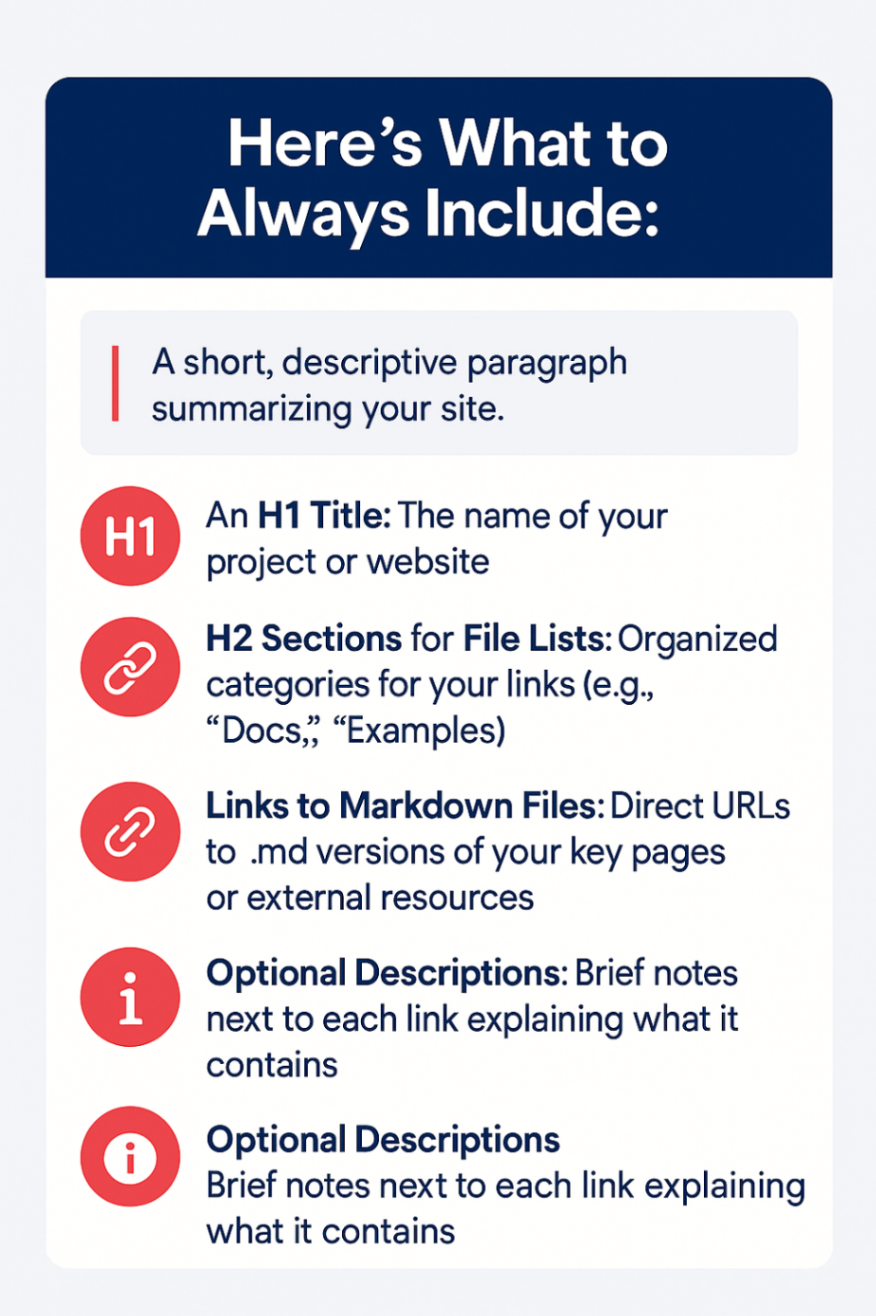

What is the essential information to include?

The llms.txt specification outlines a clear, structured approach. Begin with a main heading (H1) that contains your site's name, followed by a short summary in a blockquote. Then add sections (H2 headings) with links to more detailed documentation.
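Following that order, a small Python helper can assemble the file from a title, a one-line summary and named sections of links. The function name `build_llms_txt`, its parameters and the sample site are hypothetical; the layout (H1 title, blockquote summary, H2 sections of `- [title](url): note` links) mirrors the structure described above.

```python
from typing import Dict, List, Tuple

# A link is (title, url, note); an empty note is omitted from the output.
Link = Tuple[str, str, str]

def build_llms_txt(title: str, summary: str, sections: Dict[str, List[Link]]) -> str:
    """Assemble llms.txt text: H1 title, blockquote summary, then H2 link sections."""
    lines = [f"# {title}", "", f"> {summary}", ""]
    for name, links in sections.items():
        lines += [f"## {name}", ""]
        for link_title, url, note in links:
            entry = f"- [{link_title}]({url})"
            if note:
                entry += f": {note}"
            lines.append(entry)
        lines.append("")
    return "\n".join(lines)

# Hypothetical site, used only to illustrate the output.
print(build_llms_txt(
    "Example Co",
    "Example Co sells project-management software for small teams.",
    {
        "Docs": [("Getting started", "https://yourdomain.com/docs/start.md", "Install and first project")],
        "Optional": [("Company history", "https://yourdomain.com/about.md", "")],
    },
))
```

Because sections are emitted in the order you pass them, you decide which documentation an AI sees first.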

What are some of the Llms.txt best practices for formatting and structure?

A well-structured llms.txt helps AI understand your site quickly and accurately. Keep it clear, consistent, and easy to parse.

Here are some quick tips:

- Keep it simple: Plain text + basic Markdown. No fancy syntax.

- Follow the order: H1 title → summary → links.

- Descriptive links: Hyperlinks should clearly indicate their purpose.

- Optional section: Put less critical links under ## Optional.

- Clean URLs: Link straight to .md files whenever possible.
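The tips above can be turned into a rough self-check. This Python sketch is an illustrative lint, not an official validator: the function name `check_llms_txt`, the set of generic link titles and the .md suggestion are assumptions based on the tips in this section.

```python
import re
from typing import List

# "- [Title](url)" optionally followed by ": note".
LINK_RE = re.compile(r"^- \[([^\]]+)\]\(([^)\s]+)\)(?::\s*.*)?$")
HTML_TAG_RE = re.compile(r"</?[a-zA-Z][^>]*>")
GENERIC_TITLES = {"click here", "here", "link", "read more"}  # assumed examples

def check_llms_txt(text: str) -> List[str]:
    """Return human-readable problems; an empty list means none were found."""
    lines = [line.rstrip() for line in text.splitlines() if line.strip()]
    problems: List[str] = []
    if not lines or not lines[0].startswith("# "):
        problems.append("first line should be an H1 title, e.g. '# Example Co'")
    if len(lines) < 2 or not lines[1].startswith("> "):
        problems.append("the H1 should be followed by a '> ' summary blockquote")
    for line in lines:
        if HTML_TAG_RE.search(line):
            problems.append(f"HTML found, keep to plain Markdown: {line!r}")
        if line.lower() == "## optional" and line != "## Optional":
            problems.append(f"use '## Optional' for the optional section: {line!r}")
        if line.startswith("- "):
            match = LINK_RE.match(line)
            if not match:
                problems.append(f"list item is not a '[title](url)' link: {line!r}")
                continue
            title, url = match.group(1), match.group(2)
            if title.strip().lower() in GENERIC_TITLES:
                problems.append(f"link title is not descriptive: {title!r}")
            if not url.endswith(".md"):
                problems.append(f"consider linking a .md version: {url}")
    return problems
```

Running it before each upload catches the most common slips, such as a lowercase "## optional" heading or a "click here" link.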

How can I create an llms.txt file for my website?

Creating an llms.txt file can be done in two main ways: manual creation or automatic generation. If your site is small or you want complete control over the content, writing the file by hand in a text editor is a great option. You can follow the format guidelines to craft an accurate summary of your site. This hands-on approach is ideal for getting every detail right for your search engine optimization goals.

For larger websites or complex development environments, automatic generation is often more practical. Various tools and plugins are available, many of them in public GitHub repositories. These tools can crawl your site's content and generate an llms.txt file for you, saving time and effort. Now, let's explore these two methods in more detail.

Manual Creation versus Automatic Generation

Choosing between manual creation and automatic generation for your llms.txt file depends on your resources and needs. Manual creation gives you the ultimate control. You can personally select every link and write every description, ensuring the AI gets the exact message you want to convey about your website content.

However, for larger sites, automatic generation is a lifesaver. Several tools, often published in GitHub repositories, scan your site and build the file for you. Some content management systems, like WordPress and Drupal, even have plugins that handle this process automatically.

Here’s a quick comparison:

- Manual Creation: Offers precise control but is time-consuming for large sites.

- Automatic Generation: Fast and efficient, ideal for sites with lots of pages or complex structures.

- Hybrid Approach: You can auto-generate a base file and then manually edit it for fine-tuning.
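A sketch of the hybrid approach, assuming your site publishes a standard sitemap.xml: parse the sitemap with Python's standard library, group URLs by their first path segment into H2 sections, and produce a draft you then edit by hand. The helper names, the grouping rule and the placeholder link titles are hypothetical; real generators and CMS plugins work in their own ways.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from typing import Dict, List
from urllib.parse import urlparse

# Namespace used by standard sitemap.xml files.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def urls_from_sitemap(xml_text: str) -> List[str]:
    """Return every <loc> URL listed in a sitemap.xml document."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]

def draft_llms_txt(site_name: str, summary: str, urls: List[str]) -> str:
    """Group URLs into H2 sections by first path segment; titles are placeholders to edit."""
    sections: Dict[str, List[str]] = defaultdict(list)
    for url in urls:
        segments = [part for part in urlparse(url).path.split("/") if part]
        section = segments[0].capitalize() if segments else "Home"
        title = segments[-1].replace("-", " ").capitalize() if segments else site_name
        sections[section].append(f"- [{title}]({url})")
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for name in sorted(sections):
        lines += [f"## {name}", "", *sections[name], ""]
    return "\n".join(lines)

SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://yourdomain.com/</loc></url>
  <url><loc>https://yourdomain.com/docs/getting-started</loc></url>
  <url><loc>https://yourdomain.com/blog/release-notes</loc></url>
</urlset>"""

print(draft_llms_txt("Example Co", "Project-management software for small teams.",
                     urls_from_sitemap(SAMPLE_SITEMAP)))
```

The generated titles come from URL slugs, so the manual pass is where you rewrite them into descriptive link text and move minor pages under ## Optional.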

Placing llms.txt Correctly on Your Website

Once you've created your llms.txt file, where you place it is crucial for it to be discovered. Just like robots.txt, and typically sitemap.xml, llms.txt should be placed in the root directory of your website. This means it should be accessible at a URL like https://yourdomain.com/llms.txt.

Placing it in the root directory is the convention that tools supporting llms.txt expect, so it's the first place an AI bot would look for structured information about your site. A predictable URL makes it easy for any system to locate and parse the file.

If you place the file anywhere else, it likely won't be found. Sticking to the root directory ensures your carefully crafted docs and website information are visible to the AI systems you want to guide.
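To see why root placement matters, this Python sketch derives the expected llms.txt location from any page on a site and flags files placed elsewhere. The helper names `llms_txt_url` and `is_root_llms_txt` are hypothetical; only `urllib.parse` from the standard library is used.

```python
from urllib.parse import urlsplit

def llms_txt_url(page_url: str) -> str:
    """Return where llms.txt should live for the site hosting page_url."""
    parts = urlsplit(page_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError(f"expected an absolute URL, got {page_url!r}")
    # Only scheme and host (plus any port) are kept; the page's own path is dropped.
    return f"{parts.scheme}://{parts.netloc}/llms.txt"

def is_root_llms_txt(url: str) -> bool:
    """True only for /llms.txt at the domain root (URL paths are generally case-sensitive)."""
    return urlsplit(url).path == "/llms.txt"

print(llms_txt_url("https://yourdomain.com/blog/some-post?ref=home"))  # https://yourdomain.com/llms.txt
print(is_root_llms_txt("https://yourdomain.com/blog/llms.txt"))         # False
```

A file uploaded to /blog/llms.txt or saved as /LLMS.txt fails this check, which is exactly the kind of placement that tools looking for the standard location will miss.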

How to use llms.txt?

Using llms.txt lets you guide AI on how to access and interpret your site. It clearly communicates your content policy and helps ensure AI systems use your data correctly.

- Decide your policy: Specify which paths can or can’t be used for AI training and who to contact.

- Create the file: Save it as /llms.txt (lowercase) in UTF-8, with clear, vendor-friendly directives.

- Host correctly: Place it at the root so it's publicly reachable (HTTP 200).

- Link your sitemap and AI policy: Reference them from the file so the picture is clear and complete.

- Pair with other controls: Combine with robots.txt, meta tags, and headers, as adoption may vary.

Example -
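A minimal Python sketch of the create and host steps, assuming you can write to your site's web root (a directory such as /var/www/html, which is only a placeholder). `write_llms_txt` saves the file as UTF-8, and `http_status` reports the status code a public request receives; both names are hypothetical.

```python
from pathlib import Path
from typing import Optional
from urllib.error import HTTPError
from urllib.request import urlopen

def write_llms_txt(web_root: str, text: str) -> Path:
    """Save llms.txt at the top of the web root, UTF-8 encoded with Unix newlines."""
    path = Path(web_root) / "llms.txt"  # lowercase name; URL paths are generally case-sensitive
    with open(path, "w", encoding="utf-8", newline="\n") as handle:
        handle.write(text)
    return path

def http_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """Return the HTTP status for url (200 means publicly reachable), or None if unreachable."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return response.status
    except HTTPError as err:  # the server answered, but with a 4xx/5xx code
        return err.code
    except OSError:  # URLError (DNS, refused connection) and socket timeouts
        return None
```

After uploading, `http_status("https://yourdomain.com/llms.txt")` should return 200; a 403 or 404 points to a permissions or placement problem.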

When and how often should you update your llms.txt file?

Your llms.txt file should evolve in tandem with your website. Outdated information can mislead AI and reduce your visibility in generative search results.

Update your llms.txt whenever you make significant changes, such as:

- Adding major pages or sections

- Publishing key documentation or articles

- Restructuring site navigation or focus

- Removing outdated pages or documents

The frequency depends on your website: highly dynamic sites may require monthly updates, while others may only need quarterly revisions. Integrate llms.txt updates into your regular content workflow to ensure AI always has accurate, current information.
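One way to fold updates into your workflow is to compare the links in llms.txt against the site's current URL list, for example the URLs in sitemap.xml. This Python sketch assumes both lists use the same URL form (if your llms.txt links to .md versions, normalize them first); the function names are hypothetical.

```python
import re
from typing import Dict, List, Set

# Captures the URL inside a Markdown link: [title](https://...)
LINK_URL_RE = re.compile(r"\]\((https?://[^)\s]+)\)")

def linked_urls(llms_txt: str) -> Set[str]:
    """Collect every absolute URL linked from llms.txt."""
    return set(LINK_URL_RE.findall(llms_txt))

def drift_report(llms_txt: str, live_urls: List[str]) -> Dict[str, List[str]]:
    """Compare llms.txt links with the site's current URLs (for example from sitemap.xml)."""
    listed = linked_urls(llms_txt)
    live = set(live_urls)
    return {
        # Links to pages that no longer exist: remove or replace these.
        "removed_pages_still_listed": sorted(listed - live),
        # Live pages missing from llms.txt: review them, since the file is
        # curated and not every page belongs in it.
        "live_pages_not_listed": sorted(live - listed),
    }

current = "# Example Co\n\n> Summary.\n\n## Docs\n\n- [Guide](https://yourdomain.com/guide)\n"
print(drift_report(current, ["https://yourdomain.com/guide", "https://yourdomain.com/pricing"]))
```

Running a report like this on the same schedule as your content reviews turns "update when the site changes" into a concrete checklist.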

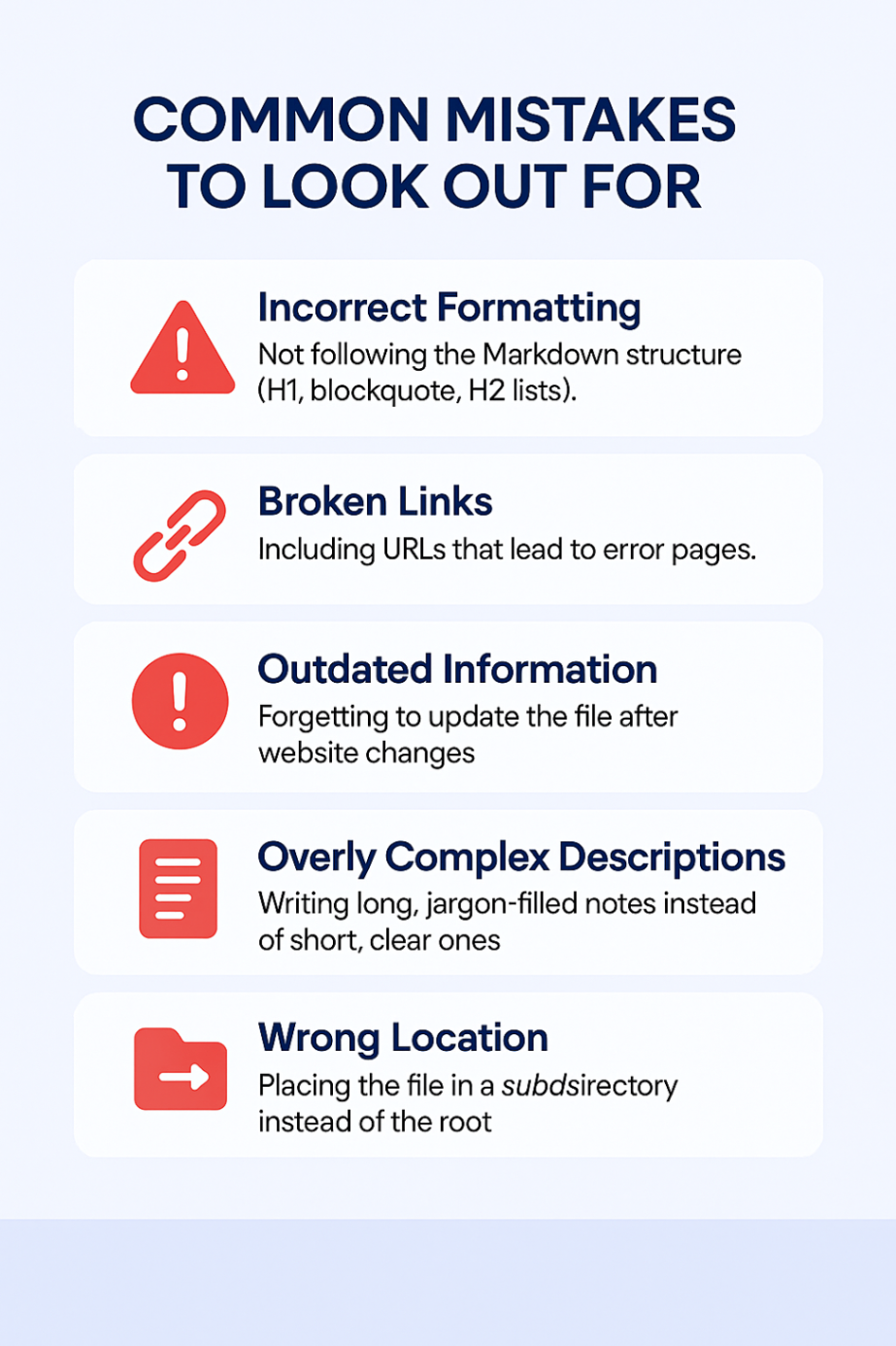

What are the common mistakes to avoid when creating an llms.txt file?

Creating an llms.txt file is simple, but a few common mistakes can make it ineffective. Avoiding them ensures that AI models can properly read and use the information you provide about your site's content.

Which sites use llms.txt?

Right now, llms.txt is still a proposed, experimental standard, not something officially adopted at scale like robots.txt.

Here’s what's happening in practice as of now:

- No major platforms (such as Google, OpenAI, and Anthropic) officially require it yet.

- Some early adopters and developers are experimenting with llms.txt on their own sites, mainly in the tech and AI community, to make their content easier for LLMs to parse.

- Variants exist: You'll also see mentions of ai.txt or gpt.txt in discussions, with the same purpose, guiding AI models on what content to use.

- Industry chatter: Developers on GitHub and in AI forums are treating it as a next-gen robots.txt, but adoption is grassroots right now.

It's not widely deployed yet, but it’s being tested by documentation-heavy sites, open-source projects, and AI-focused blogs that want more control over how LLMs ingest their content.

Will llms.txt be the future of GEO?

The llms.txt file represents a significant step toward a more structured approach to generative engine optimization (GEO). While traditional search engine optimization has relied on signals like keywords and backlinks, GEO is about communicating directly with AI. This file provides a standardized way to tell an AI what your website content is about, something that has been missing until now.

Its future as a core part of GEO depends on widespread adoption by both website owners and AI companies. Even so, it can offer a competitive advantage by making content, including complex HTML pages, more accessible and understandable to large language models. As AI continues to influence search results, having an llms.txt file could become as standard as having a sitemap, helping to overcome context window limitations and secure better visibility.

Conclusion

Understanding and implementing an llms.txt file can improve how AI systems interact with your website. By creating an llms.txt file that accurately reflects your content and its purpose, you help AI systems interpret your site correctly and position it for future developments in web technology and SEO. Regular updates and adherence to best practices will keep this part of your digital presence effective. If you're ready to take your website to the next level, don't hesitate to reach out for a free consultation to explore how we can help optimize your llms.txt file and overall strategy.

Frequently Asked Questions

What does llms.txt do?

Llms.txt guides AI on how to access and interpret your website. Unlike robots.txt, it doesn't block content. It provides context, outlines your AI usage policies, and helps ensure AI systems understand your site accurately and use your data appropriately.

What is the ideal llms.txt file format?

The ideal llms.txt uses plain text with basic Markdown, starting with an H1 title and a summary blockquote. Links should be descriptive, less critical links belong under ## Optional, and URLs should point directly to clean files for clarity.

Can I automate the creation of my llms.txt file?

Yes. Many tools use simple programming techniques to generate the file automatically, and you can find scripts in GitHub repositories. For content management systems, plugins are available that scan your site and create the file for you, which makes it easier to keep the file up to date.

How does llms.txt impact AI content crawling?

The llms.txt file doesn't block AI crawling of your content; it guides it. It provides a curated, simplified summary of your website content, helping AI models work within their limited context windows. This lets them process your information more accurately and efficiently, which can lead to higher-quality responses about your site.
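To illustrate how the curated structure helps with context limits, here is a hypothetical consumer-side sketch in Python. The llms.txt proposal says links under `## Optional` can be skipped when a shorter context is needed, so a tool short on context might read only the other sections. This is not how any particular AI product is known to work; the function names and the `short_context` flag are assumptions for illustration.

```python
import re
from typing import Dict, List, Optional, Tuple

MD_LINK_RE = re.compile(r"^- \[([^\]]+)\]\(([^)\s]+)\)")

def parse_sections(llms_txt: str) -> Dict[str, List[Tuple[str, str]]]:
    """Map each H2 section name to its (title, url) links, in file order."""
    sections: Dict[str, List[Tuple[str, str]]] = {}
    current: Optional[str] = None
    for line in llms_txt.splitlines():
        if line.startswith("## "):
            current = line[3:].strip()
            sections[current] = []
        elif current is not None:
            match = MD_LINK_RE.match(line.strip())
            if match:
                sections[current].append((match.group(1), match.group(2)))
    return sections

def urls_to_read(llms_txt: str, short_context: bool) -> List[str]:
    """Pick URLs to fetch, dropping the 'Optional' section when context is tight."""
    urls: List[str] = []
    for name, links in parse_sections(llms_txt).items():
        if short_context and name == "Optional":
            continue
        urls.extend(url for _, url in links)
    return urls
```

This is why it pays to put your most important links outside ## Optional: they survive even when a reader trims for space.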

What should I do if my llms.txt isn't being detected?

If your llms.txt file isn't being detected, first verify it's in the root directory of your website and returns HTTP 200. Check for formatting errors and make sure the file permissions allow public access. You can also add a reference to it in your robots.txt file, though robots.txt has no standard directive for llms.txt, so crawlers may ignore it.
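These checks can be partly automated. This Python sketch takes the status code, Content-Type header and body from any HTTP client and lists likely causes. The function name and its heuristics (for example, treating an HTML content type as a sign that a CMS page is being served instead of the file) are assumptions.

```python
from typing import List

def diagnose_llms_txt(status: int, content_type: str, body: str) -> List[str]:
    """List likely reasons an llms.txt response is not being picked up (empty means none found)."""
    issues: List[str] = []
    if status in (401, 403):
        issues.append(f"HTTP {status}: check file permissions and access rules")
    elif status == 404:
        issues.append("HTTP 404: the file is probably not at the site root")
    elif status != 200:
        issues.append(f"HTTP {status}: expected 200")
    if "html" in content_type.lower():
        # Heuristic: a CMS error page or template may be served instead of the file.
        issues.append(f"served as {content_type!r}; expected plain text or Markdown")
    stripped = body.strip()
    first_line = stripped.splitlines()[0] if stripped else ""
    if not first_line.startswith("# "):
        issues.append("first line is not an H1 title such as '# Example Co'")
    return issues

print(diagnose_llms_txt(404, "text/html; charset=utf-8", "<html>Not found</html>"))
```

Note that `urllib.request.urlopen` raises `HTTPError` for 4xx/5xx responses; its `code` attribute supplies the status to pass in.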

Is llms.txt really helpful?

It can be. Discussions on platforms like r/SEO suggest llms.txt is a promising tool for AI-focused search optimization. It provides a direct way to communicate with AI, which can improve how your website content is represented in generative search results. It's a proactive step to make your site AI-friendly, even though no major platform officially requires it yet.

Do I need to update my llms.txt file regularly as my website changes?

Yes, regular updates are essential. You should update your llms.txt file whenever you make significant website changes, such as adding new pages or updating core information. This ensures that AI always has the most accurate representation of the content on your site, keeping your information up to date and relevant.

What information should be included in an llms.txt file?

Your llms.txt file should include a main title (H1), a brief summary of your website content in a blockquote, and organized sections (H2s) with links to your most important markdown files or pages. This structured information gives AI a clear and concise guide to your site.

What is an llms.txt generator?

An llms.txt generator is a tool that creates a properly formatted llms.txt file for your website. It helps define AI usage policies, structure content, and ensure your site's data is easily understandable by AI systems.
